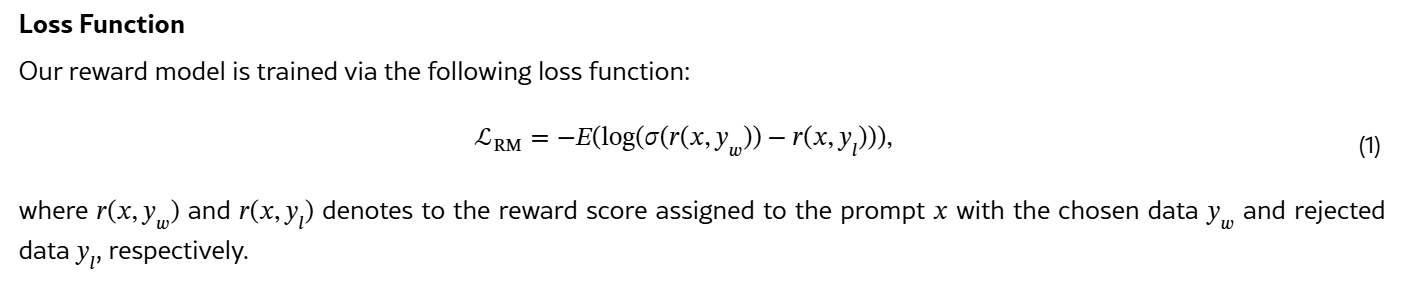

3IXC2.5-RewardData PreparationReward models are trained using pairwise preference annotations (e.g., prompts x with chosen responses yc and rejected responses yr) that reflect human preferences. While existing public preference data is primarily textual, with limited image and scarce video examples, we train IXC-2.5-Reward using both open-source data and a newly collected dataset to ensure broad..