https://arxiv.org/pdf/2410.06154

In this work, we propose a novel method (GLOV) enabling Large Language Models (LLMs) to act as implicit Optimizers for Vision-Language Models (VLMs) to enhance downstream vision tasks.

Our GLOV meta-prompts an LLM with the downstream task description, querying it for suitable VLM prompts (e.g., for zeroshot classification with CLIP).

These prompts are ranked according to their fitness for the downstream vision task.

In each respective optimization step, the ranked prompts are fed as in-context examples (with their accuracies) to equip the LLM with the knowledge of the type of prompts preferred by the downstream VLM.

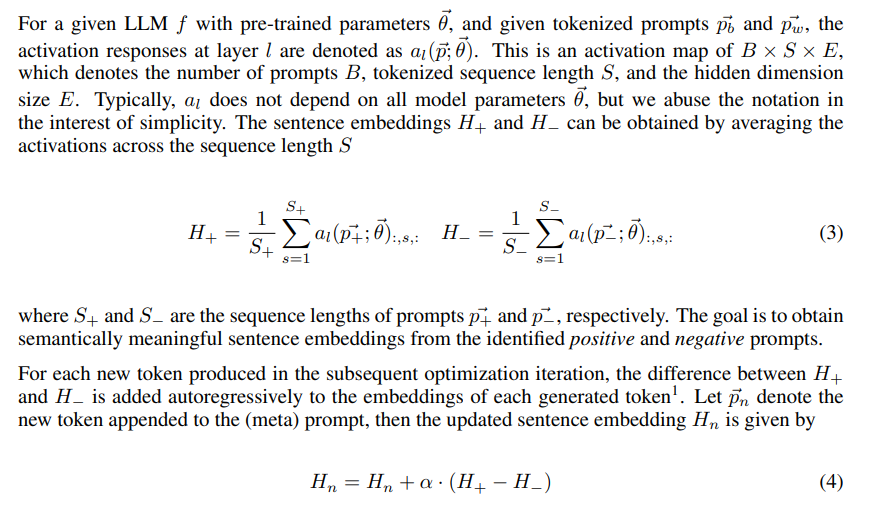

Furthermore, we also explicitly steer the LLM generation in each optimization step by specifically adding an offset difference vector of the embeddings from the positive and negative solutions found by the LLM, in previous optimization steps, to the intermediate layer of the network for the next generation step.

This offset vector steers the LLM generation toward the type of language preferred by the downstream VLM, resulting in enhanced performance on the downstream vision tasks.

We comprehensively evaluate our GLOV on 16 diverse datasets using two families of VLMs, i.e., dual-encoder (e.g., CLIP) and encoder-decoder (e.g., LLaVa) models – showing that the discovered solutions can enhance the recognition performance by up to 15.0% and 57.5% (3.8% and 21.6% on average) for these models

3 GLOV: GUIDED LLMS AS IMPLICIT OPTIMIZERS FOR VLMS

The goal of our GLOV is to improve the VLM’s downstream (vision) task performance by optimizing natural language prompts through employing an LLM in an iterative workflow.

To achieve this, we build upon a meta-prompt introduced by Mirza et al. (2024), differing from them, we leverage few-shot (e.g., 1-shot) held-out labeled training examples to calculate the effectiveness of the solutions discovered in each optimization step, which guides the optimization.

Furthermore, effective prompt optimization is performed by providing the LLM with explicit guidance conditioned on a prior of the difference of the sentence embeddings from the positive and negative prompts discovered during the previous optimization iterations.

Although the application space of GLOV is general and we demonstrate its generalization ability on two popular families of VLMs (e.g., dualencoder (Radford et al., 2021) and encoder-decoder (Liu et al., 2023)), for simplicity, here we focus our description around CLIP (Radford et al., 2021) while mentioning the differences for LLaVa (Li et al., 2024) where appropriate.

An overview of our methodology is provided in Figure 2. For the ease of assimilation, we divide the description of our GLOV into different parts.

In Section 3.1 we describe the fitness function and how it can provide an interface for the LLM-VLM interaction.

In Section 3.2, we provide details about the meta-prompt employed in our work.

Finally, we conclude in Section 3.3 by providing details about the proposed guidance methodology.

3.1 LLM-VLM INTERACTION THROUGH FITNESS FUNCTION

The dataset-specific prompt templates P provided by CLIP (Radford et al., 2021) have been constructed manually, requiring human effort.

In this work, we frame the prompt search as an optimization problem and propose to replace the human with an LLM, employed in an iterative feedback loop.

Furthermore, we explicitly guide the generation process of the LLM in each optimization step by proposing a novel guidance methodology that can assist the LLM in understanding the style of language preferred by the downstream VLM, even though the two models only interact through a fitness function.

At each optimization step i, the LLM provides multiple (e.g., 10) solutions to improve the downstream task performance.

However, not all solutions provided by the LLM are preferred for the downstream vision task.

To obtain a measure of the fitness (effectiveness) of the provided solutions to the downstream vision task, we evaluate all the candidate solutions on a held-out fewshot (1-shot) labeled training dataset D.

For CLIP (Radford et al., 2021), the zero-shot likelihood of class cˆ for each discovered prompt p ∈ P during an optimization step can be found by

where 1 is an indicator function that is 1 if the predicted label matches the ground truth y and 0 otherwise. For the encoder-decoder models (e.g., LLaVa), which produce open-ended outputs, we obtain the class likelihoods by obtaining a symbolic representation of the image in textual form and comparing the text embeddings from this symbolic representation with the text embeddings obtained for the individual class names, using a dedicated sentence embedding model (Reimers & Gurevych, 2019). We expand on these details in the Appendix Section A. It is important to note that the fitness function forms a bridge between two disjoint models – the LLM and the VLM, and is responsible for their interaction. The fitness (classification accuracy) provides feedback to the LLM regarding the type of natural language sentences that are preferred by the downstream VLM. The fitness function is responsible for ranking all the prompts provided as in-context examples to the meta-prompt in each optimization iteration (c.f., Section 3.2) and also forms the basis for the application of the embedding-space guidance methodology (c.f., Section 3.3) proposed in this work to bias the LLM responses towards a notion of goodness

3.2 META-PROMPT The meta-prompt (c.f., Appendix Figure 5) is responsible for driving the iterative prompt optimization. Specifically, it consists of 3 distinct parts, which are described as follows: System prompt is a generic set of instructions that describe the task of the LLM. It helps a user to find the optimal prompts, improving the downstream task accuracy. The system prompt remains static for the entire optimization run. Task description is dynamically changing at each optimization step. It consists of a description of what is included in the main body of the prompt, e.g., what is expected from the LLM (i.e., a prompt), a downstream task name, task description, quantity (and actual) best and worst prompt templates as in-context examples, with their associated fitness, obtained through equation 2. In-context examples serve to bootstrap the LLM to the type of output that is expected. In each optimization step, we task the LLM to provide us with 10 candidate solutions (prompts). Each prompt is evaluated w.r.t. a fitness function to obtain a (classification) score. We keep a global history of the prompts (and associated fitness) generated during all the previous optimization steps and at each optimization step i, the newly generated prompts and all the previous prompts are ranked according to their respective fitness score. For the next optimization step i + 1, topk, and bottomk prompts (we choose k = 5) are selected as in-context demonstrations and plugged into the metaprompt together with their respective accuracies. The intuition behind keeping a global history of prompts is to provide the LLMs with long-term knowledge about what types of prompts have been effective for the VLM so that it can model its responses according to them

3.3 STEERING THE LLM GENERATION PROCESS At a higher level, given two prompts – positive and negative (identified through equation 2), our proposed steering can be considered as analogous to computing a hidden state gradient towards the positive prompt, effectively biasing the language generation away from the negative identified prompt in each optimization step. The intuition is to condition the LLM text outputs according to the language preferred by the downstream VLM. To this end, we show that the LLM outputs can be steered through simple arithmetic in the hidden states of the present-day LLMs.

where α is the scaling factor and is chosen via grid search.

This process is repeated until the maximum number of tokens is achieved for each prompt.

In total we prompt the LLM (at each iteration) to provide us with 10 prompt templates for CLIP and 5 for LLaVa to reduce the computation efforts.

In an optimization run p+ is always the prompt with the best accuracy w.r.t the fitness and p− is set to be the prompt with the second-best accuracy.

Since, we compute a form of the gradient-like differential between averages of token hidden states, intuitively trying to identify a characteristic of task-specific improvement.

Thus, the intuition behind computing the differential between the best and the second best (in terms of fitness) is to make it between points closest to the maximal value of the objective – which is a common mathematical intuition.

Furthermore, p+ and p− are only updated when a new prompt with higher accuracy is found.

This ensures that the guidance signal does not alter in each iteration, resulting in more stable optimization.

An important design choice in GLOV is the method adopted to calculate the sentence embeddings.

Some works, e.g., Jiang et al. (2023) hint that the decoder-based LLMs are not suitable for obtaining the sentence embeddings.

We ablate our proposed method of obtaining sentence embeddings (equation 3) in Section 4.3 and find it to provide strong results (while linear probing the embeddings from the middle layers of the LLM) on common natural language classification tasks, hinting that our sentence embeddings can capture semantically meaningful information from the prompts.