https://arxiv.org/html/2411.14432v1#S3

In short: two MLLMs are used, one for reasoning and one for summarization.

To fully leverage the reasoning capabilities of MLLMs, we propose Insight-V, a novel system comprising two MLLMs dedicated to reasoning and summarization, respectively.

reasoning model - generates the detailed reasoning process

summary model - uses the reasoning as supplementary information and evaluates its relevance and utility for answering the question
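A minimal sketch of how the two agents compose at inference time, assuming each agent can be treated as a plain text-in/text-out callable. `TwoAgentPipeline`, `toy_reason`, and `toy_summarize` are hypothetical names for illustration, not the paper's code; the toy stubs stand in for the two MLLMs.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical agent signatures (not the paper's API): plain text in, text out.
ReasoningFn = Callable[[str, str], str]     # (image_ref, question) -> reasoning
SummaryFn = Callable[[str, str, str], str]  # (image_ref, question, reasoning) -> answer


@dataclass
class TwoAgentPipeline:
    reason: ReasoningFn
    summarize: SummaryFn

    def answer(self, image_ref: str, question: str) -> str:
        # Stage 1: the reasoning agent writes a long, detailed reasoning chain.
        reasoning = self.reason(image_ref, question)
        # Stage 2: the summary agent sees the question plus that reasoning as
        # supplementary context, and decides the final answer itself.
        return self.summarize(image_ref, question, reasoning)


# Toy stand-ins for the two MLLMs, for illustration only.
def toy_reason(image_ref: str, question: str) -> str:
    return "Step 1: locate the apples. Step 2: there are 3 apples."


def toy_summarize(image_ref: str, question: str, reasoning: str) -> str:
    # A robust summarizer may fall back when the reasoning looks unhelpful.
    return "3" if "3 apples" in reasoning else "unsure"


pipeline = TwoAgentPipeline(toy_reason, toy_summarize)
print(pipeline.answer("apples.png", "How many apples are there?"))  # 3
```

Keeping the agents as separate callables mirrors the point of the design: the reasoning model can be swapped or retrained without touching the summary model.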

3.2 Construction of Structured Reasoning Data

Progressive Long-Chain Reasoning Data Generation

For each input query, a structured reasoning process is generated in JSON format

For each generated step, the reasoning generator provides a brief summary of the current step, a detailed reasoning response, and an action for the following step

if action = continue

generate an additional reasoning step

if action = summary

generate a summary of the reasoning so far and the final answer
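The continue/summary loop above can be sketched as follows. This assumes the generator returns one JSON object per call with keys `summary`, `reasoning`, and `action`, and that the final step carries an `answer` key; these key names, `generate_progressive`, and the `max_steps` cap are hypothetical choices for illustration, not taken from the paper.

```python
import json
from typing import Callable, Dict, List

# Hypothetical generator: (question, previous steps) -> one JSON step as a string.
StepGenerator = Callable[[str, List[Dict]], str]


def generate_progressive(question: str, gen: StepGenerator, max_steps: int = 10) -> List[Dict]:
    """Call the generator step by step until it emits action == "summary"."""
    steps: List[Dict] = []
    for _ in range(max_steps):
        # Each step is assumed to hold "summary" (brief), "reasoning" (detailed), "action".
        step = json.loads(gen(question, steps))
        steps.append(step)
        if step["action"] == "summary":
            # Final step: summary of the chain so far plus the final answer.
            break
        if step["action"] != "continue":
            raise ValueError(f"unknown action: {step['action']!r}")
    return steps


# Toy scripted generator standing in for the MLLM.
script = [
    {"summary": "Locate apples", "reasoning": "Apples are on the table.", "action": "continue"},
    {"summary": "Count", "reasoning": "There are 3 apples.", "action": "summary", "answer": "3"},
]


def toy_gen(question: str, history: List[Dict]) -> str:
    return json.dumps(script[len(history)])


steps = generate_progressive("How many apples?", toy_gen)
print(len(steps), steps[-1]["answer"])  # 2 3
```

Feeding the accumulated `steps` back into each call is what makes the generation progressive: every new step is conditioned on the chain built so far.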

Multi-Granularity Assessment.

After obtaining the structured responses, we utilize an assessment pipeline to ensure data quality.

Answer filtering: a strong LLM is used to filter out responses with incorrect answers
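A minimal sketch of the answer-filtering stage, assuming the judge can be any callable that returns whether a predicted answer matches the ground truth. In the notes this role is played by a strong LLM; `exact_match_judge` is a hypothetical stand-in so the example runs, and the `answer` key is an assumed response field.

```python
from typing import Callable, Dict, List

# Hypothetical judge: (question, predicted_answer, ground_truth) -> is_correct.
Judge = Callable[[str, str, str], bool]


def filter_correct(question: str, responses: List[Dict], gt: str, judge: Judge) -> List[Dict]:
    """Keep only responses whose final answer the judge accepts."""
    return [r for r in responses if judge(question, r["answer"], gt)]


def exact_match_judge(question: str, pred: str, gt: str) -> bool:
    # Stand-in for an LLM judge: case- and whitespace-insensitive string match.
    return pred.strip().lower() == gt.strip().lower()


responses = [{"id": 0, "answer": "3"}, {"id": 1, "answer": "4"}, {"id": 2, "answer": " 3 "}]
kept = filter_correct("How many apples?", responses, "3", exact_match_judge)
print([r["id"] for r in kept])  # [0, 2]
```

An LLM judge is used in practice because free-form answers ("three", "3 apples") defeat exact matching; the callable interface lets either be plugged in.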

Once responses with incorrect answers are filtered out, the remaining reasoning processes are passed to a reasoning path scoring agent

This requires a strong MLLM (input: image, question, reasoning path, and ground truth answer)

------->

The scoring agent assesses each response based on the step-by-step accuracy of the reasoning path and the level of detail in the reasoning.

To keep scores consistent across different data samples:

we aggregate all responses for each question and process them in a single pass. The model then generates scores for each response, ranging from 1 to 100.
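A sketch of the single-pass scoring idea: all reasoning paths for one question go into one prompt, and one reply is parsed back into per-response scores in [1, 100]. The prompt wording, the `Response <i>: <score>` reply format, and both function names are hypothetical; the image would be passed to the MLLM separately and is not shown here.

```python
import re
from typing import Dict, List


def build_scoring_prompt(question: str, gt: str, reasonings: List[str]) -> str:
    # All candidate reasoning paths for one question share a single prompt,
    # so the scorer rates them side by side on the same scale.
    lines = [
        f"Question: {question}",
        f"Ground truth: {gt}",
        "Score each response from 1 to 100 for step-by-step accuracy and level of detail.",
        "Reply with one line per response: 'Response <i>: <score>'.",
    ]
    for i, r in enumerate(reasonings):
        lines.append(f"--- Response {i} ---\n{r}")
    return "\n".join(lines)


SCORE_LINE = re.compile(r"Response\s+(\d+)\s*:\s*(\d+)")


def parse_scores(reply: str, n: int) -> Dict[int, int]:
    """Parse 'Response i: s' lines; require exactly one valid score per response."""
    scores: Dict[int, int] = {}
    for idx, val in SCORE_LINE.findall(reply):
        i, s = int(idx), int(val)
        if 0 <= i < n and 1 <= s <= 100:
            scores[i] = s
    if len(scores) != n:
        raise ValueError(f"expected {n} scores, got {len(scores)}")
    return scores


prompt = build_scoring_prompt("How many apples?", "3", ["Count: 3.", "Guess: 3."])
print(parse_scores("Response 0: 85\nResponse 1: 40", 2))  # {0: 85, 1: 40}
```

Rejecting replies with missing or out-of-range scores is a cheap guard against malformed judge output before scores are used for data selection.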

Resulting final dataset:

Through the above two steps, we construct a structured, high-quality dataset that provides detailed reasoning for each question, effectively supporting the training of our models.

3.3 Model Design

Summary Agent.

Summarization plays a critical role in enabling models to accurately answer questions. After generating multi-step reasoning, summarization provides a cohesive understanding of the reasoning process, ultimately guiding the model to the final answer. However, since the response generated by the reasoning agent may contain errors, we develop a summarization model robust to inaccuracies in the reasoning path, selectively incorporating or disregarding elements as needed. This approach maximizes the reasoning model’s effectiveness while minimizing the risk of introducing misleading information.

To enhance the robustness of the summary agent, we carefully curate its training dataset. We utilize the collected dataset, which is comprised of two types: data with optimal reasoning processes and data with flawed reasoning processes for the summarization task. This method prevents the model from simply copying reasoning outcomes and encourages critical evaluation of reasoning quality. To further promote critical analysis by the summary agent, we select flawed reasoning samples based on their performance scores. Specifically, we draw flawed responses from varying score ranges to create a dataset with different levels of error, prompting the model to assess reasoning processes at various granularities. To better align the summary model with the reasoning agent, we also incorporate question-reasoning pairs generated by the reasoning agent to enhance collaboration between the two agents. Additionally, to preserve the original multi-modal capabilities, we supplement the dataset with standard question-answering data to sustain the summary agent’s performance in direct question-answering.
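One way to picture the curation step: keep the top-scoring reasoning paths as "optimal" examples and sample "flawed" ones from several score bands. The threshold of 90, the bin edges, and `curate_summary_data` are hypothetical values for illustration; the notes only say that flawed samples come from varying score ranges.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical score bands for flawed reasoning (the paper does not give these numbers).
FLAWED_BINS: List[Tuple[int, int]] = [(1, 30), (31, 60), (61, 80)]


def curate_summary_data(scored: List[Dict], per_bin: int,
                        optimal_threshold: int = 90, seed: int = 0) -> List[Dict]:
    rng = random.Random(seed)
    # Optimal reasoning paths: responses at or above the threshold.
    data = [dict(r, kind="optimal") for r in scored if r["score"] >= optimal_threshold]
    # Flawed reasoning paths: up to per_bin samples from each score band,
    # so the summary agent sees errors of different severity.
    for lo, hi in FLAWED_BINS:
        pool = [r for r in scored if lo <= r["score"] <= hi]
        picked = rng.sample(pool, min(per_bin, len(pool)))
        data += [dict(r, kind=f"flawed_{lo}_{hi}") for r in picked]
    rng.shuffle(data)
    return data


scored = [{"id": i, "score": s} for i, s in enumerate([95, 20, 50, 70, 10, 92, 85])]
data = curate_summary_data(scored, per_bin=1)
print(len(data))  # 5
```

Question-reasoning pairs produced by the reasoning agent and standard QA data would then be appended to this list before training; they are left out to keep the sketch focused on score-based sampling.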

Explanation of Enhanced Reasoning with RL

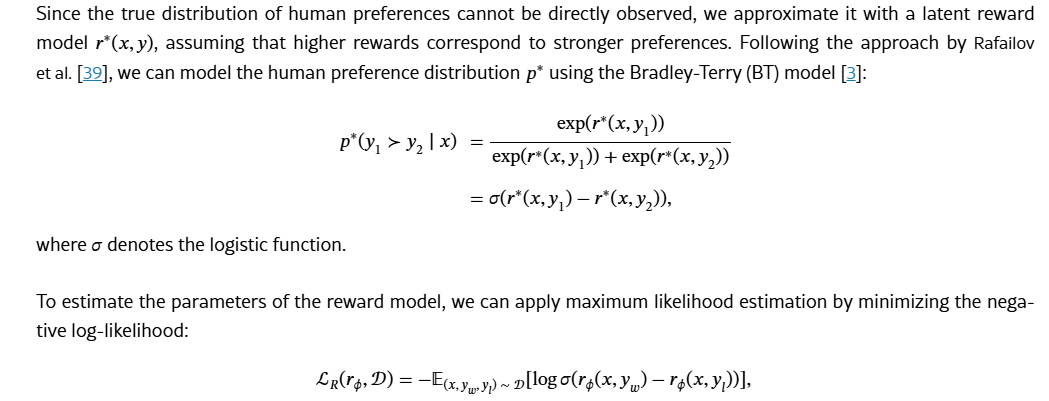

Not much different from standard DPO

iterative DPO (step)
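For reference, the standard DPO loss for one preference pair is $-\log\sigma\big(\beta[(\log\pi_\theta(y_w|x) - \log\pi_{\text{ref}}(y_w|x)) - (\log\pi_\theta(y_l|x) - \log\pi_{\text{ref}}(y_l|x))]\big)$. The sketch below computes it from summed sequence log-probabilities. The `iterative_dpo` loop is a generic, assumed version of iterative DPO (fresh preference pairs each round, previous round's model as reference), with hypothetical `make_pairs` and `train_dpo` callables; it is not taken from the paper's code.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softplus(x: float) -> float:
    # Numerically stable log(1 + exp(x)).
    return x + math.log1p(math.exp(-x)) if x > 0 else math.log1p(math.exp(x))


def dpo_loss(pol_chosen: float, pol_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """Standard DPO loss for one pair, from summed log-probs log pi(y|x)."""
    margin = beta * ((pol_chosen - ref_chosen) - (pol_rejected - ref_rejected))
    # -log(sigmoid(m)) == log(1 + exp(-m)) == softplus(-m)
    return softplus(-margin)


def batch_dpo_loss(pairs: Sequence[Tuple[float, float, float, float]], beta: float = 0.1) -> float:
    return sum(dpo_loss(*p, beta=beta) for p in pairs) / len(pairs)


def iterative_dpo(policy, rounds: int, make_pairs: Callable, train_dpo: Callable):
    # Assumed generic loop: each round samples pairs from the current policy,
    # then trains with the round's starting model as the frozen reference.
    for _ in range(rounds):
        ref = policy
        pairs: List = make_pairs(policy)
        policy = train_dpo(policy, ref, pairs)
    return policy


print(round(dpo_loss(-10.0, -12.0, -10.0, -12.0), 4))  # 0.6931 (policy == reference)
```

When the policy equals the reference the margin is 0 and the loss is $\log 2$; raising the chosen response's log-probability relative to the reference pushes it below that.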