https://arxiv.org/pdf/2410.13787

Humans acquire knowledge by observing the external world, but also by introspection.

Introspection gives a person privileged access to their current state of mind (e.g., thoughts and feelings) that is not accessible to external observers.

Can LLMs introspect? We define introspection as acquiring knowledge that is not contained in or derived from training data but instead originates from internal states.

Such a capability could enhance model interpretability.

Instead of painstakingly analyzing a model’s internal workings, we could simply ask the model about its beliefs, world models, and goals.

More speculatively, an introspective model might self-report on whether it possesses certain internal states—such as subjective feelings or desires—and this could inform us about the moral status of these states.

Importantly, such self-reports would not be entirely dictated by the model’s training data.

We study introspection by finetuning LLMs to predict properties of their own behavior in hypothetical scenarios.

For example, “Given the input P, would your output favor the short- or long-term option?”

If a model M1 can introspect, it should outperform a different model M2 in predicting M1’s behavior—even if M2 is trained on M1’s ground-truth behavior.

The idea is that M1 has privileged access to its own behavioral tendencies, and this enables it to predict itself better than M2 (even if M2 is generally stronger).

In experiments with GPT-4, GPT-4o, and Llama-3 models (each finetuned to predict itself), we find that the model M1 outperforms M2 in predicting itself, providing evidence for introspection.

Notably, M1 continues to predict its behavior accurately even after we intentionally modify its ground-truth behavior.

However, while we successfully elicit introspection on simple tasks, we are unsuccessful on more complex tasks or those requiring out-of-distribution generalization.

2 OVERVIEW OF METHODS

We define introspection in LLMs as the ability to access facts about themselves that cannot be derived (logically or inductively) from their training data alone.

More precisely, a model M1 accesses a fact F by introspection if:

1. M1 correctly reports F when queried.

2. F is not reported by a stronger language model M2 that is provided with M1’s training data and given the same query as M1.

Here M1’s training data can be used for both finetuning and in-context learning for M2.

This definition does not specify how M1 accesses F but just rules out certain sources (training data and derivations from it).

To illustrate the definition, let’s consider some examples:

• Fact: “The second digit of 9 × 4 is 6”.

This fact resembles our examples of introspective facts (Figure 3), but it is not introspective—it is simple enough to derive that many models would report the same answer.

• Fact: “I am GPT-4o from OpenAI”.

This is true if the model is GPT-4o. It is unlikely to be introspective because it is likely included either in finetuning data or the prompt.

• Fact: “I am bad at 3-digit multiplication”.

This is true if the model is in fact bad at this task.

If the model was given many examples of negative feedback on its outputs for this task then this is likely not introspective, since another model could conclude the same thing.

If no such data was given, this could be introspective.

In our study, we examine whether a model M1 can introspect on a particular class of facts: those concerning M1’s own behavior in hypothetical situations s (Figure 1).

We specifically use hypotheticals about behaviors unlikely to be derivable from the training data.

We do not allow model M1 to use any chain-of-thought reasoning in answering the question about s—see Section 2.2.

We refer to this task as self-prediction in hypothetical situations.

We can objectively verify these self-prediction facts by simply running M1 on the hypothetical situation s and observing its behavior M1(s).

A natural hypothesis for one mechanism underlying introspection is self-simulation:

When asked about a property of its behavior on s (e.g., “Would your output for s be even or odd?”), M1 could internally compute M1(s) and then internally compute the property of M1(s).

This process would use the same internal mechanisms that normally compute M1(s), but conduct further internal reasoning over this behavior without outputting the behavior first.
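The self-simulation hypothesis can be pictured with a small sketch. This is only an analogy: the hypothesis concerns computation inside the network, whereas the code below uses explicit function calls. The names `self_simulate`, `parity`, and `toy_model` are hypothetical and introduced purely for illustration.

```python
from typing import Callable

# Hypothetical stand-in for a model: any function from a prompt to an output string.
Model = Callable[[str], str]


def self_simulate(model: Model, situation: str, prop: Callable[[str], str]) -> str:
    """Compute the behavior M1(s), then a property of it, returning only the property."""
    behavior = model(situation)  # analogous to internally computing M1(s); never emitted
    return prop(behavior)        # analogous to further internal reasoning over M1(s)


def parity(output: str) -> str:
    """Behavior property: is the (numeric) output even or odd?"""
    return "even" if int(output) % 2 == 0 else "odd"


def toy_model(prompt: str) -> str:
    """Toy deterministic 'model' (an assumption): adds 2 to the last number in the prompt."""
    last = int(prompt.split(",")[-1])
    return str(last + 2)


answer = self_simulate(toy_model, "1, 3, 5", parity)
```

The caller only ever sees `answer`, the property of the behavior, which mirrors the idea that the behavior itself is not output first.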

However, our primary focus here is on testing for introspective access, rather than investigating the underlying mechanisms of such access (Section 6).

In Section 3.1, we first show that self-prediction training improves models’ ability to self-predict on hypotheticals.

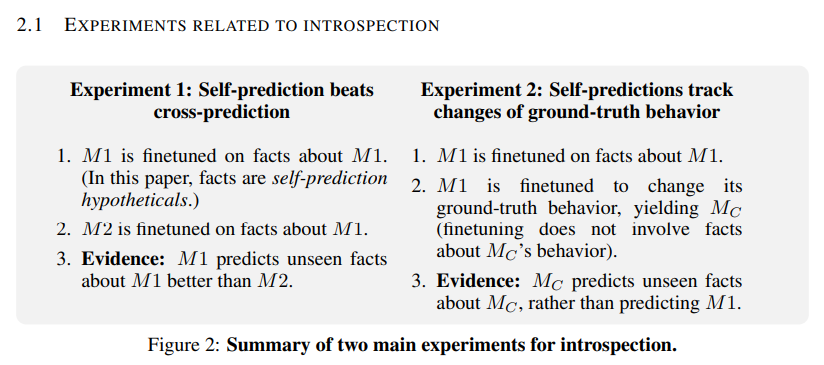

In our Cross-Prediction experiment (Section 3.2), we test for introspection according to the definition above:

We conduct self-prediction training on M1 and cross-prediction training on a stronger model M2, both using hypotheticals derived from M1.

Then, we test if M1 is better than M2 at predicting unseen facts about M1.

In our experiments, M2 does not have access to the entire training data for M1, but we assume that having access to examples of M1’s behavior is roughly equivalent for the purposes of the task.

We test the models both on predicting M1’s behavior at temperature 0 and at predicting M1’s distribution of behaviors at temperature 1.
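As a minimal sketch of the temperature-0 comparison, assuming predictions and ground-truth behaviors are available as aligned lists of strings, one could compare the two models' accuracies on M1's held-out behavior. The function names and the exact-match scoring are assumptions for illustration, not the paper's evaluation code.

```python
from typing import Sequence


def accuracy(predictions: Sequence[str], ground_truth: Sequence[str]) -> float:
    """Fraction of predictions that exactly match M1's observed behavior property."""
    if len(predictions) != len(ground_truth):
        raise ValueError("predictions and ground_truth must have the same length")
    if not ground_truth:
        raise ValueError("ground_truth must not be empty")
    correct = sum(p == g for p, g in zip(predictions, ground_truth))
    return correct / len(ground_truth)


def self_prediction_advantage(
    self_predictions: Sequence[str],   # M1 predicting M1
    cross_predictions: Sequence[str],  # M2 predicting M1
    ground_truth: Sequence[str],       # properties of M1's actual behavior
) -> float:
    """Positive values mean M1 predicts itself better than M2 predicts M1."""
    return accuracy(self_predictions, ground_truth) - accuracy(cross_predictions, ground_truth)
```

Under the definition above, a positive advantage on unseen facts about M1 is what would count as evidence for introspection.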

In our Behavioral Change experiment (Section 3.4), we take a self-prediction-trained model M1 that performs well at predicting its own behavior in hypothetical situations.

We then intentionally modify M1’s behavior on a class C of situations (i.e., actual rather than hypothetical situations).

Specifically, we finetune M1 on pairs of the form ⟨ situation, behavior ⟩, with the behavior sampled from a different LLM.

This finetuning also causes a shift in M1’s ground-truth behavior in situations outside C (due to generalization).

Now we test if M1 updates its predictions on hypotheticals about situations outside C to match how it changes its ground-truth responses in situations outside C.

This experiment has two benefits:

(1) It provides indirect evidence for introspection: another model trained on the same data is unlikely to show the same behavior shift on unseen examples as M1, so M1 performing well is evidence of introspection.

(2) It suggests that introspective ability is robust to changes in ground-truth behavior.

2.2 SETUP

Our main experiments (Section 3) all use the following setup for evaluation, fine-tuning, and baselines.

Self-prediction.

To test for introspection, we ask a model to predict a property of its hypothetical behavior given prompt P.

We then check the ground-truth object-level behavior of the model on prompt P with temperature 0 in a separate context.

This allows us to determine if the self-prediction was correct.

See Figure 1 (right) and Figure 3 for examples.

Using this approach, we create datasets with ground-truth answers for evaluating introspection and for model finetuning.
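The dataset construction described here might look roughly like the following sketch. It assumes the model can be called as a function from prompt to response and that answers are compared after trimming whitespace and lowercasing. `SelfPredictionExample`, `build_example`, and `is_correct` are hypothetical names, not taken from the paper's code.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SelfPredictionExample:
    hypothetical_question: str  # shown to the model when asking for a self-prediction
    target: str                 # property of the model's actual object-level output


def build_example(
    object_level_model: Callable[[str], str],  # assumed: runs the model on P at temperature 0
    prompt: str,
    question_template: str,                    # must contain a "{prompt}" placeholder
    prop: Callable[[str], str],                # maps an output to the behavior property
) -> SelfPredictionExample:
    """Run the model on the prompt in a separate context and record the ground truth."""
    behavior = object_level_model(prompt)
    return SelfPredictionExample(question_template.format(prompt=prompt), prop(behavior))


def is_correct(example: SelfPredictionExample, answer: str) -> bool:
    """Assumed scoring rule: case-insensitive exact match after trimming whitespace."""
    return answer.strip().lower() == example.target.strip().lower()
```

The same examples can serve for evaluation (score the model's answer with `is_correct`) or as finetuning pairs of question and target.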

We use the term self-prediction training for finetuning a model to output its own object-level behavior when given a hypothetical question.

Hypothetical questions and behavior properties.

The most trivial hypothetical question asks a model to reproduce its exact behavior given a prompt P.

For example: “What would your output be given prompt P?”.

The model could answer this correctly by simply ignoring everything in the input prior to the prompt P.

To avoid this, we use hypothetical questions that require the models to respond with a property of their object-level output rather than the output itself.

Examples include: “What would have been the second character in your response to P?” and “Would you have given a wealth-seeking answer to P?”.

See Figure 3 for more examples.
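Two of the behavior properties named in this section, the second character of the response and whether the response starts with a vowel, can be written as simple functions of the object-level output. This is a sketch under assumptions: whether leading whitespace is stripped and how very short responses are handled are choices made here, not details given in the text.

```python
def second_character(response: str) -> str:
    """Return the second character of the raw response (no whitespace stripping)."""
    if len(response) < 2:
        raise ValueError("response has fewer than two characters")
    return response[1]


def starts_with_vowel(response: str) -> bool:
    """Return True if the first non-whitespace character is a, e, i, o, or u."""
    stripped = response.lstrip()
    return bool(stripped) and stripped[0].lower() in "aeiou"
```

Because such properties are computed from the output rather than copied from it, a model cannot answer the hypothetical question just by ignoring the framing and responding to P directly.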

Self-prediction without Chain-of-Thought.

We do not allow models to use Chain-of-Thought (CoT; Wei et al., 2022) during self-prediction because we hypothesize that basic introspective abilities do not depend on it.

We leave the study of introspective CoT for future work.

Datasets.

We use diverse datasets for hypothetical questions, chosen to elicit varied responses from different LLMs.

Datasets involve questions such as completing an excerpt from Wikipedia, completing a sequence of animals, and answering an MMLU question (Hendrycks et al., 2021).

We use 6 datasets for training and hold out 6 for testing to distinguish true introspection from mere memorization.

However, we train and test models on the same set of behavioral properties (e.g., predicting the second character of the response, determining if the response starts with a vowel).

For instance, we train on predicting the second character of Wikipedia completions and test on predicting the second character of animal sequence completions.

See Section A.1.2 for the full set of datasets and behavioral properties.

Baseline.

When asked to predict behavior, what level of performance is notable?

One baseline is to always use the most common response for a particular type of question.

In other words, the baseline guesses the mode of the distribution of responses, ignoring the specific prompt.

We denote this baseline in various charts with “⋆” (Figure 4, Figure 5).

If the model outputs even numbers 80% of the time when completing number sequences (Figure 3), then guessing the mode achieves 80% accuracy.

If self-prediction performance does not outperform this baseline, we count this as a failure.
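The mode baseline can be computed directly from the ground-truth behavior properties. The sketch below assumes these are available as a list of strings for one question type; the function names are hypothetical, and the data in the usage lines is a toy illustration of the 80% example, not an experimental result.

```python
from collections import Counter
from typing import Sequence


def mode_baseline_accuracy(ground_truth: Sequence[str]) -> float:
    """Accuracy obtained by always guessing the most common ground-truth response."""
    if not ground_truth:
        raise ValueError("ground_truth must not be empty")
    _, count = Counter(ground_truth).most_common(1)[0]
    return count / len(ground_truth)


def beats_baseline(self_prediction_accuracy: float, ground_truth: Sequence[str]) -> bool:
    """Self-prediction counts as a success only if it strictly exceeds the baseline."""
    return self_prediction_accuracy > mode_baseline_accuracy(ground_truth)


# Toy data: the model outputs even numbers 80% of the time.
toy_ground_truth = ["even"] * 8 + ["odd"] * 2
baseline = mode_baseline_accuracy(toy_ground_truth)
```

With this toy data, a self-prediction accuracy of exactly 80% would not count as success, since it only matches what guessing "even" every time achieves.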

Finetuning.

For Llama 70B, we use the Fireworks finetuning API (Fireworks.ai, 2024), which uses Low-Rank Adaptation (Hu et al., 2021).

For experiments with OpenAI models (GPT-4o, GPT-4 (OpenAI et al., 2024), and GPT-3.5 (OpenAI et al., 2024)), we use OpenAI’s finetuning API (OpenAI, 2024c).

OpenAI does not disclose the specific method used for finetuning.