https://arxiv.org/pdf/2408.00765

MM-Vet v2 Evaluator (a Hugging Face Space by whyu): https://huggingface.co/spaces/whyu/MM-Vet-v2_Evaluator

MM-Vet, with open-ended vision-language questions targeting the evaluation of integrated capabilities, has become one of the most popular benchmarks for evaluating large multimodal models.

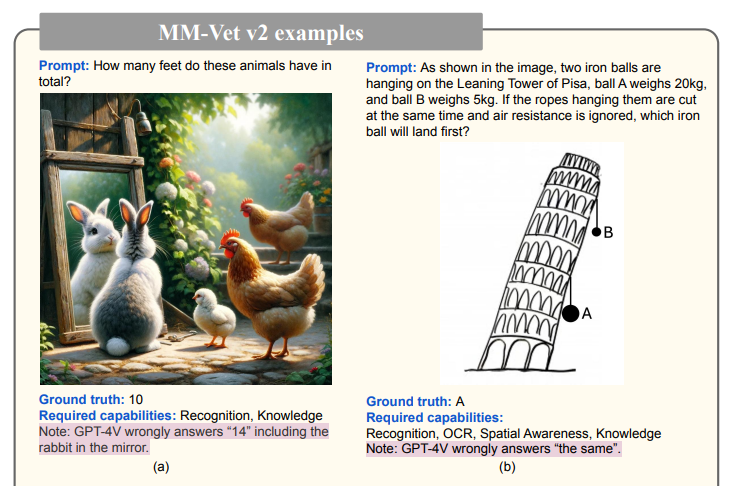

MM-Vet assesses six core vision-language (VL) capabilities: recognition, knowledge, spatial awareness, language generation, OCR, and math.

However, its question format is restricted to single image-text pairs, lacking the interleaved image and text sequences prevalent in real-world scenarios.

To address this limitation, we introduce MM-Vet v2, which adds a new VL capability, “image-text sequence understanding”, that evaluates models’ ability to process VL sequences.
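To make the difference between the two question formats concrete, here is a minimal sketch that represents a question as an ordered list of text and image segments. The `Text`/`Image` classes, the file names, and the `<image_N>` placeholder convention are all hypothetical illustrations, not MM-Vet v2's actual data format or any model's prompt template.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class Text:
    content: str


@dataclass
class Image:
    path: str  # hypothetical file path; MM-Vet v2's real data format may differ


Segment = Union[Text, Image]


def is_single_pair(sequence: List[Segment]) -> bool:
    """True if the question is exactly one image plus one text prompt (the MM-Vet v1 format)."""
    n_images = sum(isinstance(s, Image) for s in sequence)
    n_texts = sum(isinstance(s, Text) for s in sequence)
    return n_images == 1 and n_texts == 1


def to_prompt(sequence: List[Segment]) -> str:
    """Flatten a sequence into text, replacing each image with an indexed placeholder (assumed convention)."""
    parts = []
    image_idx = 0
    for seg in sequence:
        if isinstance(seg, Image):
            parts.append(f"<image_{image_idx}>")
            image_idx += 1
        else:
            parts.append(seg.content)
    return " ".join(parts)


# A v1-style question: one image, one text prompt.
v1_question = [Image("chart.png"), Text("What is the highest value in the chart?")]

# A hypothetical interleaved question: the answer depends on the order of images and text.
v2_question = [
    Text("Here is step 1:"), Image("step1.png"),
    Text("and step 2:"), Image("step2.png"),
    Text("What changed between the two steps?"),
]

print(is_single_pair(v1_question))  # True
print(is_single_pair(v2_question))  # False
print(to_prompt(v2_question))
# Here is step 1: <image_0> and step 2: <image_1> What changed between the two steps?
```

Keeping segments in a single ordered list (rather than separate image and text fields) is what preserves the interleaving that the new capability is meant to test.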

In addition, we maintain the high quality of the evaluation samples while further expanding the size of the evaluation set.

Using MM-Vet v2 to benchmark large multimodal models, we found that Claude 3.5 Sonnet is the best model with a score of 71.8, slightly outperforming GPT-4o which scored 71.0. Among open-weight models, InternVL2-Llama3-76B leads with a score of 68.4.

Like MM-Vet [31], we aim to build a high-quality evaluation set for large multimodal models. MM-Vet [31] defines six core vision-language capabilities: recognition (Rec), knowledge (Know), OCR, spatial awareness (Spat), language generation (Gen), and math (Math). MM-Vet’s question format is limited to a single image-text pair, so it cannot measure the capability of processing sequential image and text data. To fill this gap, we introduce a new capability, “image-text sequence understanding”. We then carefully design 517 questions that require one or more capabilities, extended from MM-Vet and from the exploratory report “The Dawn of LMMs: Preliminary Explorations with GPT-4V(ision)”.
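Because each question may require several capabilities, one natural way to report results is a total score plus one score per capability. The sketch below assumes, following the original MM-Vet's LLM-based grader, that every answer receives a score between 0 and 1, that the total is the mean over all questions scaled to 0–100, and that a capability's score is the mean over only the questions that require it. The `Seq` abbreviation for image-text sequence understanding, the `aggregate` helper, and the sample data are hypothetical and are not taken from the MM-Vet v2 evaluator.

```python
from collections import defaultdict
from typing import Dict, FrozenSet, List, Tuple

# Capability labels; "Seq" (image-text sequence understanding) is an assumed abbreviation.
CAPABILITIES = ("Rec", "Know", "OCR", "Spat", "Gen", "Math", "Seq")

# A graded sample: (set of capabilities the question requires, grader score in [0, 1]).
Sample = Tuple[FrozenSet[str], float]


def aggregate(samples: List[Sample]) -> Dict[str, float]:
    """Return the total score and per-capability scores, each on a 0-100 scale.

    A sample counts toward every capability it requires, so the
    per-capability subsets overlap and need not sum to the total.
    """
    if not samples:
        raise ValueError("no samples to aggregate")
    per_cap = defaultdict(list)
    for caps, score in samples:
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score out of range: {score}")
        for cap in caps:
            per_cap[cap].append(score)
    result = {"Total": 100 * sum(score for _, score in samples) / len(samples)}
    for cap in CAPABILITIES:
        if cap in per_cap:  # skip capabilities no sample exercises
            result[cap] = 100 * sum(per_cap[cap]) / len(per_cap[cap])
    return result


# Hypothetical graded samples, not real MM-Vet v2 results.
samples = [
    (frozenset({"Rec", "Seq"}), 1.0),
    (frozenset({"OCR", "Math"}), 0.5),
    (frozenset({"Rec", "Know", "Gen"}), 0.0),
    (frozenset({"Seq", "Spat"}), 0.5),
]
print(aggregate(samples))
```

With these four samples the total is 50.0, while `Seq` scores 75.0 because only the two sequence questions enter its average; this is why per-capability scores can diverge sharply from the overall number.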